As businesses accelerate the adoption of artificial intelligence (AI), a new class of digital workers is emerging. These AI agents are no longer just passive algorithms running in the background. They are now active participants in enterprise systems. They make decisions, generate code, interact with customers, and manage data.

However, while these agents gain autonomy, one important thing is often missing: identity.

Enterprises have long invested in identity and access management (IAM) for humans. They also manage machine identities for service accounts and applications. Yet very few are prepared to assign identity to AI agents. This gap is more than a technical oversight. It is a growing business risk.

AI Agents: More Than Just Code

AI agents are different from the tools businesses have used in the past. They learn, adapt, and act on their own. Unlike traditional bots, they are not fixed scripts. They can plan, reason, and carry out tasks with minimal or no human input.

This evolution gives AI agents a new type of autonomy. In a sense, they are digital employees. But unlike employees, they don’t have ID badges, user credentials, or structured accountability. This creates a gap in how organizations manage risk and governance.

The old models of identity don’t apply.

What Happens Without Identity?

Imagine deploying dozens of AI agents across departments. They write code, analyze financial data, automate HR tasks, and handle support tickets. But none are tracked, monitored, or named in your identity systems. There is no audit trail, no permission control, and no way to verify who did what.

That’s not a hypothetical scenario. It’s already happening.

Multiple companies have faced real-world issues caused by AI misuse. Some saw confidential data leaked through chatbots, and others suffered reputational damage from unmonitored AI responses. By some estimates, AI-related security incidents have cost more than $4.8 million per breach on average.

The root cause? AI agents were acting with full access, but no accountability.

Why Current IAM Tools Fall Short

Today’s IAM systems are designed for humans. They assume passwords, multi-factor authentication, and role hierarchies. None of these map cleanly onto agents that operate through APIs, scripts, and automated workflows.

Machine identity systems, while helpful, are also limited. They focus on static assets, like servers or applications. AI agents, by contrast, are dynamic. They can be created in seconds, evolve, and disappear without notice, which is incompatible with static identity systems.

This mismatch creates two key problems:

- Visibility Gap: AI agents operate outside the traditional identity perimeter.

- Accountability Vacuum: Agent-driven actions have no clear owner, logs, or oversight.

For business leaders, this means loss of control. Without identity, you cannot govern AI. Without governance, you risk compliance failures, data leaks, and operational breakdowns.
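To make the two gaps concrete, here is a minimal sketch of what an agent identity record might look like. It assumes a hypothetical in-memory `AgentRegistry`; the class names, the scope strings, and the 15-minute default lifetime are illustrative choices, not part of any IAM product or standard. The idea is that every agent gets a unique ID, a named human owner, a limited set of permissions, and an expiry, so short-lived agents do not linger as unowned credentials.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
import uuid


@dataclass(frozen=True)
class AgentIdentity:
    """Hypothetical identity record for one AI agent."""
    agent_id: str
    owner: str            # accountable human or team
    scopes: frozenset     # permissions granted to the agent
    expires_at: datetime  # identities are short-lived by default

    def is_valid(self, now=None):
        now = now or datetime.now(timezone.utc)
        return now < self.expires_at

    def allows(self, scope, now=None):
        # An expired identity allows nothing, whatever its scopes say.
        return self.is_valid(now) and scope in self.scopes


class AgentRegistry:
    """Illustrative in-memory inventory of agent identities."""

    def __init__(self):
        self._agents = {}

    def register(self, owner, scopes, ttl_minutes=15):
        if not owner:
            raise ValueError("every agent needs an accountable owner")
        identity = AgentIdentity(
            agent_id=f"agent-{uuid.uuid4()}",
            owner=owner,
            scopes=frozenset(scopes),
            expires_at=datetime.now(timezone.utc) + timedelta(minutes=ttl_minutes),
        )
        self._agents[identity.agent_id] = identity
        return identity

    def active(self, now=None):
        # Answers the visibility question: which agents are live right now?
        return [a for a in self._agents.values() if a.is_valid(now)]


registry = AgentRegistry()
support_agent = registry.register("alice@example.com", ["tickets:read"])
print(support_agent.allows("tickets:read"))   # permitted scope
print(support_agent.allows("payroll:write"))  # never granted
```

A real deployment would back this with the organization's existing identity provider rather than a dictionary in memory; the sketch only shows which fields close the visibility and accountability gaps.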

Emerging Tools and Standards

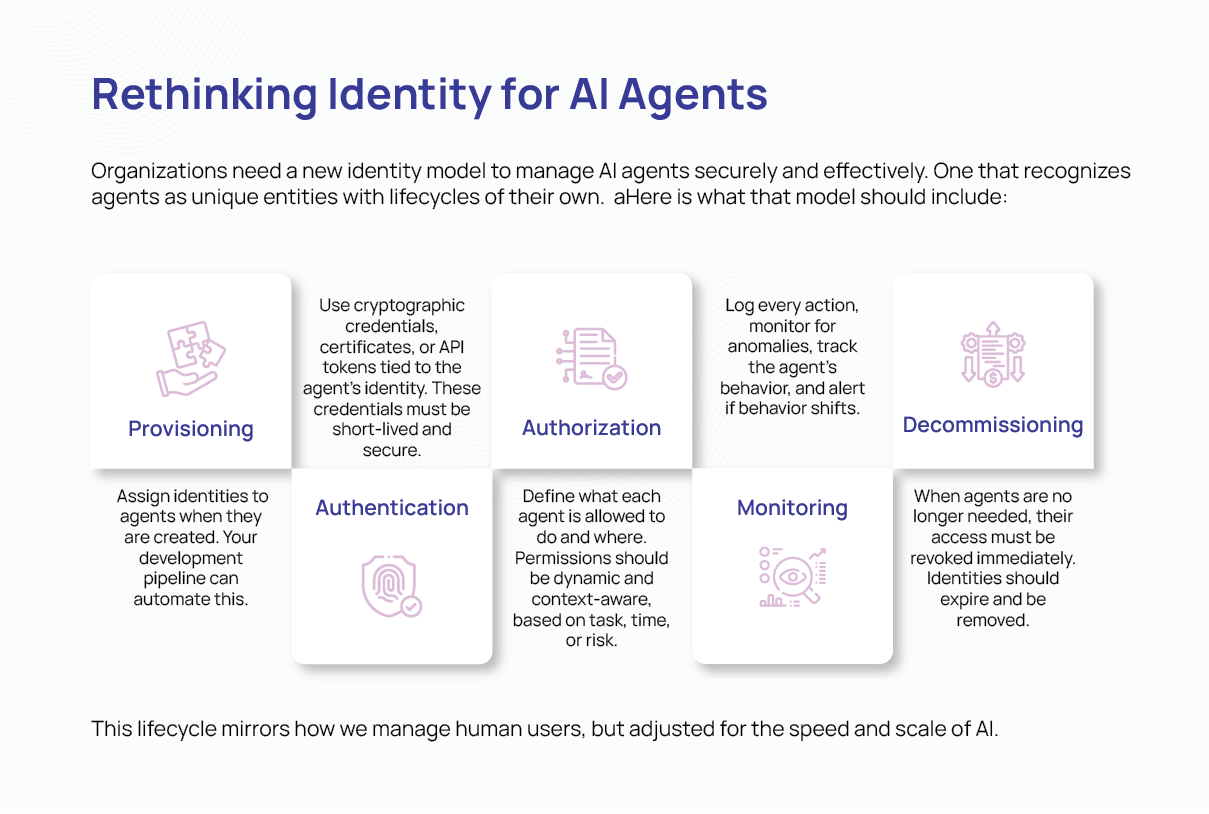

The industry is beginning to respond. Some vendors are exploring AI-specific identity frameworks. There is growing interest in technologies like Decentralized Identifiers (DIDs) and Verifiable Credentials (VCs), which offer flexible ways to prove identity without relying on fixed user accounts.

Microsoft has proposed evolving OAuth protocols to support AI agent identities natively. These innovations are still early, but they point in the right direction.

More importantly, leading companies are starting to build “AI observability” tools. These tools track agents’ actions and decisions and flag changes in behavior over time. This is vital for maintaining compliance and ensuring responsible AI use.
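As a rough illustration of what such observability involves, the sketch below keeps an append-only log of agent actions and compares an agent's recorded actions against an expected baseline. The `AuditLog` class, its method names, and the action labels are assumptions made for this example; they do not describe any vendor's tool.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    agent_id: str
    action: str
    resource: str
    timestamp: datetime


class AuditLog:
    """Illustrative append-only record of what each agent did."""

    def __init__(self):
        self._events = []

    def record(self, agent_id, action, resource):
        event = AuditEvent(agent_id, action, resource, datetime.now(timezone.utc))
        self._events.append(event)
        return event

    def events_for(self, agent_id):
        # Traceability: every action attributed to one agent.
        return [e for e in self._events if e.agent_id == agent_id]

    def action_counts(self, agent_id):
        return Counter(e.action for e in self.events_for(agent_id))

    def unexpected_actions(self, agent_id, baseline):
        # Behavior change: action types the agent was not expected to perform.
        return set(self.action_counts(agent_id)) - set(baseline)


log = AuditLog()
log.record("agent-1", "read", "ticket/42")
log.record("agent-1", "export", "customers.csv")
print(log.unexpected_actions("agent-1", baseline={"read"}))
```

Comparing against a fixed baseline is the simplest possible check. Production tools would likely look at frequencies, resources touched, and trends over time, but the principle is the same: actions must be attributable to a named agent before any change in behavior can be detected.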

Business Risks and Strategic Opportunities

For executives, the message is clear: AI identity is not just a security issue but also a business enabler.

Without identity, AI agents are black boxes. With identity, they become transparent, governed, and safe to scale. This means:

- Lower compliance risk: Easier audits, data protection, and regulatory alignment.

- Stronger governance: Clear ownership, logs, and controls.

- Faster innovation: Confidence to deploy AI agents in more business-critical areas.

It also aligns with growing regulatory pressure. The EU AI Act, for instance, requires transparency, traceability, and accountability in AI systems. Other regions are likely to follow.

Leadership Obligations

Business and technology leaders should start by asking the following questions:

- Do we know which AI agents are currently operating in our environment?

- Can we trace the actions taken by each agent?

- Are permissions for AI agents limited and monitored?

- Is identity management part of our AI deployment lifecycle?

If the answer to any of these is no, there is work to be done.
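Teams that keep an inventory of their agents could turn these four questions into an automated check. The sketch below is one hypothetical way to do that; the `AgentRecord` fields, the `governance_gaps` function, and the limit of five scopes are assumptions chosen for illustration, not an established benchmark.

```python
from dataclasses import dataclass


@dataclass
class AgentRecord:
    """Hypothetical inventory entry for one deployed agent."""
    name: str
    registered: bool          # known to the identity system?
    actions_logged: bool      # can its actions be traced?
    scopes: tuple             # permissions it currently holds
    identity_at_deploy: bool  # was identity assigned at deployment?


def governance_gaps(agent, max_scopes=5):
    """Return the checklist questions this agent fails, in order."""
    gaps = []
    if not agent.registered:
        gaps.append("not in the identity inventory")
    if not agent.actions_logged:
        gaps.append("actions are not traceable")
    # A wildcard or a long scope list suggests permissions are not limited.
    if "*" in agent.scopes or len(agent.scopes) > max_scopes:
        gaps.append("permissions are too broad")
    if not agent.identity_at_deploy:
        gaps.append("identity was not assigned at deployment")
    return gaps


finance_bot = AgentRecord("finance-bot", True, False, ("*",), False)
for gap in governance_gaps(finance_bot):
    print(f"{finance_bot.name}: {gap}")
```

An empty result means the agent passes all four questions; anything else is a concrete item for the backlog.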

Identity is not a luxury. It is the foundation of trust in digital systems. As AI agents become more capable, they must be treated with the same care and control as human users.

Final Thoughts

The future of enterprise AI is autonomous. Agents will make decisions, take actions, and even manage other systems. However, autonomy without identity is a risk that businesses cannot afford. By building identity into the foundation of your AI strategy, you ensure that innovation remains safe, compliant, and aligned with business goals.

The path forward is clear: If you want to trust your AI agents, you must first be able to identify them.